The project investigates how humans can understand a robot's intentions through anticipatory path selection combined with iconic and verbal communication, so as to minimize the discomfort caused by uncertainty about the robot's actions.

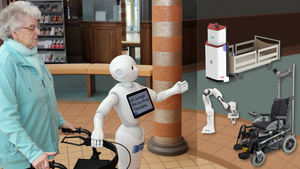

Annual growth rates of more than 10% in robot installations demonstrate the increasing importance of robots, although most of these are industrial robots that operate almost completely isolated from humans behind safety fences. Autonomous service robots for home use or nursing care, by contrast, are in constant close contact with people. Up to now, little attention has been paid to designing communication between such a robot and patients, nursing staff or third parties during episodic encounters while the robot moves autonomously. In particular, little is known about the best choice of communication mode (non-verbal kinematic, non-verbal iconic, or verbal). The project aims to develop intuitive non-verbal and informative verbal forms of communication between robots and humans. Using a rehabilitation environment as an example application, these forms should be transferable to very different service-sector domains that involve direct human-robot interaction.

Communicative behaviour requires integrating different modalities. To this end, the project develops methods that fuse visual and auditory perceptions into coherent inputs and that select and synchronize suitable output modalities. To realize autonomous movement of robotic systems in the application environment, solutions are developed for two problem areas. First, localization and navigation must be reliable and robust enough to cope with varying numbers of people and with occlusions. Second, driving models must produce movements that people can anticipate, possibly combined with verbal and iconic modalities. To make use of verbal communication, we investigate how relationships between humans and robots can be appropriately established, maintained and ended. This includes, in particular, dialogues about intentions that cannot be clearly recognized from movement patterns or iconic signals.

Partners

- EK AUTOMATION, Reutlingen (coordinator)

- GESTALT Robotics GmbH, Berlin

- HFC Human-Factors-Consult GmbH, Berlin

- DFKI GmbH