Marlon Nuske was interviewed by Eliane Eisenring of Trivadis

Mr Nuske, there are currently around 28,210 pieces of “space junk” in Earth orbit – all large enough to damage or even destroy an active satellite. Is that as threatening as it sounds?

At first glance, you might think: we have thousands of satellites in space, so what's the problem if one gets wrecked? What makes the situation considerably more serious, however, is the chain reaction that experts fear. A collision with space debris would not just destroy a single satellite. The fragments thrown off in the collision could damage other satellites, which would in turn shed more debris. In the worst case, an entire region of space could become essentially unflyable.

How can artificial intelligence (AI) help?

For one, AI can help us predict when and where there is a risk of collision. That would be the first step in collision prevention: we use data on the positions and velocities of the satellites to determine their trajectories. Based on these, a conventionally programmed algorithm calculates potential collision points. This is where we come in: by applying AI to the database of all potential collision points, we want to estimate the risk of each collision more precisely. This matters because only the high-risk cases are subsequently assessed by experts. A better automated assessment can therefore reduce the number of cases that have to be assessed manually while ensuring that high-risk collisions are not overlooked.
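
To make the two-stage idea concrete, here is a minimal Python sketch, not the DFKI system. A conventional geometric step computes each encounter's time and distance of closest approach. It assumes straight-line relative motion, which is a strong simplification of real orbit propagation. A pluggable scorer then ranks the encounters, and only those above a threshold would go to human experts. `placeholder_risk` is a hypothetical stand-in for a trained model. All names, units and the threshold are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    """Position (km) and velocity (km/s) of one object in a shared frame (assumed)."""
    pos: tuple[float, float, float]
    vel: tuple[float, float, float]


def closest_approach(a: State, b: State) -> tuple[float, float]:
    """Conventional step: time (s) and miss distance (km) of closest approach,
    assuming straight-line relative motion (a strong simplification)."""
    dr = [pb - pa for pa, pb in zip(a.pos, b.pos)]
    dv = [vb - va for va, vb in zip(a.vel, b.vel)]
    dv2 = sum(c * c for c in dv)
    # Minimise |dr + dv*t|: t* = -(dr . dv) / |dv|^2, clamped to t >= 0 (future only).
    t = 0.0 if dv2 == 0 else max(0.0, -sum(r * v for r, v in zip(dr, dv)) / dv2)
    miss = math.hypot(*(r + v * t for r, v in zip(dr, dv)))
    return t, miss


def placeholder_risk(miss_km: float, scale_km: float = 1.0) -> float:
    """Hypothetical stand-in for a learned risk model: closer passes score higher."""
    return math.exp(-miss_km / scale_km)


def triage(pairs, scorer=placeholder_risk, threshold=0.5):
    """Keep only encounters whose score reaches the threshold, highest risk first."""
    flagged = []
    for name, a, b in pairs:
        t, miss = closest_approach(a, b)
        score = scorer(miss)
        if score >= threshold:
            flagged.append((name, t, miss, score))
    return sorted(flagged, key=lambda item: item[3], reverse=True)


sat = State((0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
near = State((10.0, 0.1, 0.0), (-1.0, 0.0, 0.0))
far = State((10.0, 50.0, 0.0), (-1.0, 0.0, 0.0))
print([name for name, *_ in triage([("near", sat, near), ("far", sat, far)])])  # ['near']
```

Keeping the scorer as a parameter means the geometric step stays conventional. Only the ranking would be swapped for a learned model.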

That would be the first step in collision avoidance – how could AI be used in further steps?

As I said, in the next step, humans look at the high-risk collisions manually. They calculate different trajectories, determine which evasive manoeuvre could be flown and initiate it if necessary. AI could also help here, for example by having satellites determine evasive manoeuvres automatically on board. But then the safety requirements are on a completely different level than for an initial pre-assessment of the risk.

Higher safety requirements make automating processes and using AI increasingly delicate...

That's true, so you always have to keep this in mind: the higher the risk you take, the more closely you have to monitor things and understand how the AI works; the keyword here is explainable AI. For some applications, I think you can use AI without any problems and don't have to worry too much if something goes wrong. For other tasks, it is worth considering whether AI is the best solution at all, or whether conventional algorithms are better suited. Hybrid algorithms, which combine a conventional algorithm with an AI algorithm, can also help here. For example, you can replace only those parts of the conventional algorithm where AI really offers an advantage.

What actually distinguishes the application of AI in space from that on Earth?

One general challenge in space is that you are often several years behind the current state of the art. It takes an incredibly long time to get an instrument to the point where it can be sent into space. Then there are the various safety regulations. And especially when you are working with methods like AI and want to use them directly on a satellite or a robot, it makes a big difference whether you can use the latest GPU or have to work with hardware that corresponds more to the standard of five years ago.

Let's change the focus from satellites to satellite images of the Earth. How are these currently analysed and how could AI improve this process?

We are working on this as part of the AI4EO (Artificial Intelligence For Earth Observation) Solution Factory of ESA and DFKI. We are looking at what we can do with the data that satellites provide. Of course, the range of applications is huge: you can identify flooded areas, predict forest fires early or analyse the development of green spaces in cities. This area is currently growing massively, because since 2017 there have been two satellites in orbit, Sentinel-2A and Sentinel-2B, which together provide a freely available image of every place on Earth every five days. With such a large amount of data in particular, being able to analyse images automatically with the help of AI is an enormous advantage.

What specific application are you currently working on in connection with satellite images?

We are currently involved in a use case that is about making forecasts for agricultural yields. For this, we use satellite images of the corresponding fields as well as data sources that provide information on soil quality or weather. To be able to estimate the yield at the end of the season, you have to combine these different data.

Certainly not easy on an operational level.

That's true. The data formats differ greatly in terms of spatial and temporal resolution. As I said, you get satellite images about every five days, at a resolution of 10 metres per pixel. Digital elevation models have a spatial resolution of 30 metres per pixel, but you only get one image, i.e. a single point in time; after all, the elevation of an area hardly changes. Weather data, on the other hand, has hardly any spatial resolution (you don't determine the weather for every single square metre of a field) but a very high temporal resolution. And for each data source, you have to decide: do I train separate machine learning models and merge their outputs at the end, or do I merge the data beforehand and feed it into a single model?
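
The interview does not say which fusion strategy the project uses. The following minimal Python sketch illustrates both options under simple assumptions. First, the sources are brought onto a common grid. A 30 m elevation grid is upsampled to 10 m by nearest-neighbour repetition, a factor of 3. Daily weather values are averaged over five-day windows to roughly match the image cadence. Early fusion then concatenates everything into one feature vector. Late fusion combines the outputs of separately trained models. All function names and the averaging scheme are hypothetical. Real pipelines would also handle map projections, clouds and misaligned acquisition dates.

```python
from statistics import mean


def upsample_nearest(grid, factor):
    """Nearest-neighbour upsampling: repeat each cell factor x factor times
    (e.g. a 30 m elevation grid onto a 10 m image grid with factor=3)."""
    out = []
    for row in grid:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def aggregate_daily(values, window):
    """Average a daily series over consecutive, non-overlapping windows
    (e.g. window=5 for a five-day cadence); a trailing partial window is dropped."""
    return [mean(values[i:i + window]) for i in range(0, len(values) - window + 1, window)]


def early_fusion(image_series, elevation, weather_series):
    """Early fusion: concatenate all sources into one feature vector for a single model."""
    return [*image_series, elevation, *weather_series]


def late_fusion(predictions, weights):
    """Late fusion: combine outputs of separately trained models by weighted average."""
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)


dem_10m = upsample_nearest([[120.0, 135.0]], 3)     # 1x2 grid at 30 m -> 3x6 at 10 m
weather_5d = aggregate_daily(list(range(1, 11)), 5)  # 10 daily values -> two 5-day means
features = early_fusion([0.21, 0.34], dem_10m[0][0], weather_5d)
estimate = late_fusion([6.8, 7.4], weights=[2, 1])    # hypothetical per-source model outputs
```

Early fusion lets a single model learn interactions between sources. Late fusion keeps each model specialised on data of one resolution. This is the trade-off the question above describes.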

In addition to the analysis of satellite images and collision prevention, you are also working on how AI methods can be used to create a "digital twin" of the Earth. What is that all about?

This is a project that is part of the so-called "European Green Deal" and the "Destination Earth" initiative based on it. The aim is to develop a digital model of the entire Earth. In line with the Green Deal, the first planned areas of application are strongly oriented towards climate protection. With a digital replica of the Earth that is as accurate as possible, you can make predictions about the future, as we already do to some extent with climate models. We try to answer questions like: How will the climate develop? How would it develop if we were suddenly able to stop emitting greenhouse gases? Or if we simply carried on as before? At DFKI, we are specifically focusing on where AI can play a role in making these predictions and which parts of the models should be replaced with machine learning.

Digital twins – don't they also exist in industry?

They do. In fact, it is very common to create a digital model of a production process in order to simulate it and improve it if necessary. The same idea can of course be applied to the entire Earth, but bringing the different data into the same format is correspondingly more challenging. For the Earth in particular, researchers from different fields have already produced an incredible number of models, but they often exist in isolation from each other.

So the challenge is to couple, for example, a model of the atmosphere with a model of the oceans. Then you could calculate much more specific things. For example: How would air quality in Germany develop if we planted more trees in the country? And what effect would that have on our climate overall? Such predictions and answers to "what if" scenarios would help political decision-makers assess the consequences of their actions.
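
To illustrate what "coupling" means in principle, here is a deliberately trivial Python sketch. It does not describe Destination Earth or any real Earth-system model. Two independent toy components, an "atmosphere" and an "ocean", each hold a single temperature. A coupler computes the heat flux between them at every time step and passes it to both. The exchange law, the heat capacities and the forward-Euler time stepping are all illustrative assumptions.

```python
def atmosphere_step(t_atm, heat_in, heat_capacity, dt):
    """Toy atmosphere component: temperature changes by the heat it receives."""
    return t_atm + dt * heat_in / heat_capacity


def ocean_step(t_ocn, heat_out, heat_capacity, dt):
    """Toy ocean component: temperature changes by the heat it gives away."""
    return t_ocn - dt * heat_out / heat_capacity


def run_coupled(t_atm, t_ocn, steps, dt=1.0, k=0.1, c_atm=1.0, c_ocn=4.0):
    """Coupler: at each step, compute the exchanged flux from both states and
    pass it to each component, which otherwise know nothing about each other."""
    history = []
    for _ in range(steps):
        flux = k * (t_ocn - t_atm)  # heat flowing from ocean to atmosphere (toy law)
        t_atm = atmosphere_step(t_atm, flux, c_atm, dt)
        t_ocn = ocean_step(t_ocn, flux, c_ocn, dt)
        history.append((t_atm, t_ocn))
    return history


history = run_coupled(10.0, 20.0, steps=200)
```

With these defaults, the total heat `c_atm * t_atm + c_ocn * t_ocn` stays constant at 90. Both temperatures converge towards its heat-capacity-weighted mean of 18. The point is the pattern: each component stays separate, and only the coupler knows how they interact.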

DIGITAL TWINS IN BUSINESS

A digital twin is a virtual replica of a product or process that is fed with real data. Realistic modelling can help companies monitor and improve products or processes. In automotive development, for example, engineers test load scenarios for individual components or entire vehicles, up to and including virtual crash tests. The Italian sports car manufacturer Maserati has almost halved the development time of its vehicles in this way. Combined with machine learning, a digital twin could even provide automated feedback to its physical counterpart, so that the two ultimately form a self-controlling system.

At ESA_Lab@DFKI, researchers work directly with employees from industry. What are the advantages of this collaboration?

In the so-called "TransferLabs" of DFKI, our AI experts can work directly with specialists from the respective application area on a solution for a specific project. For us, this direct exchange is important to make sure we develop what industry actually needs. In return, the companies benefit from our proximity to basic research, which time and again provides important findings that take projects a decisive step further.

Do you have an example of this?

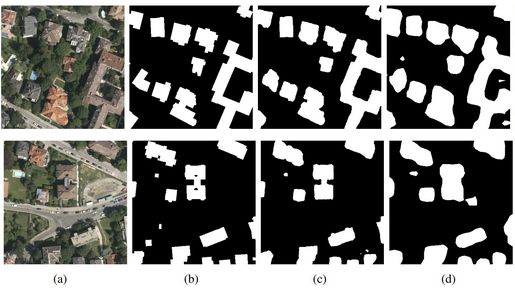

One current project involves recognising the ground plans of buildings in satellite images with the help of an algorithm. Until now, the problem has been formulated as: "Is a given pixel part of a building or not?" On this basis, a neural network, an FCN (Fully Convolutional Network), has been given satellite images and has had to decide for each pixel whether or not it belongs to a building (see Figure 1, (d)).

Now, however, basic research has found that recognition works much better if, instead of this task, you ask: "How far is a given pixel from the outline of the building?" This way, each pixel gets a number that represents its distance to the outer edge of the building. This gives the so-called multi-task network more ways to discriminate, and it can determine with higher accuracy whether something is a building or not, just by formulating the task differently (see Figure 1, (c)).
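
The interview does not give the exact label definition. The following minimal Python sketch assumes one common choice: a signed Euclidean distance, in pixels, from each pixel to the nearest pixel of the opposite class. The value is positive inside buildings and negative outside. The sketch only shows how such per-pixel regression targets could be derived from an existing binary building mask, not the network itself. The function names are hypothetical. The brute-force search is only practical for tiny tiles; at scale, an efficient distance transform such as SciPy's `scipy.ndimage.distance_transform_edt` would be the usual tool.

```python
import math


def signed_distance_labels(mask):
    """mask: 2-D list of 0/1 values (1 = building pixel).
    Returns, per pixel, the Euclidean distance (in pixels) to the nearest pixel
    of the opposite class: positive inside buildings, negative outside.
    Brute force O(N^2), so only suitable for small tiles."""
    h, w = len(mask), len(mask[0])
    coords = [(r, c) for r in range(h) for c in range(w)]
    labels = [[0.0] * w for _ in range(h)]
    for r, c in coords:
        inside = mask[r][c] == 1
        others = [(rr, cc) for rr, cc in coords if (mask[rr][cc] == 1) != inside]
        d = min((math.hypot(r - rr, c - cc) for rr, cc in others), default=math.inf)
        labels[r][c] = d if inside else -d
    return labels


def labels_to_mask(labels):
    """The binary building mask is recoverable by thresholding the signed distance at 0."""
    return [[1 if d > 0 else 0 for d in row] for row in labels]


# A 5x5 tile with a 3x3 "building" in the middle.
tile = [[0] * 5] + [[0, 1, 1, 1, 0] for _ in range(3)] + [[0] * 5]
dist = signed_distance_labels(tile)
```

In this tile, the centre pixel gets +2.0 and the building's corner pixels get +1.0. The tile's corners get about -1.41. Thresholding the result at zero recovers the original mask exactly. The distance target therefore carries strictly more information than the binary one.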

Finally, the million-dollar question: When will the first completely AI-controlled space mission take place?

My colleagues who work on robotics in space could say a lot more about that (laughs). DFKI is very active in the field of unmanned autonomous systems and their use in hostile environments, including space. Recently, for example, an underwater robot was used to test long-range navigation for exploring ice-covered seas, such as those on Jupiter's moon Europa.

It's definitely a very dynamic development and I'm already looking forward to seeing what technological innovations artificial intelligence will bring us in the next ten, twenty or even fifty years.

ABOUT MARLON NUSKE

Dr Marlon Nuske (born 1992) has been a senior researcher at the German Research Center for Artificial Intelligence (DFKI) in Kaiserslautern since 2021. Among other things, he leads the AI4EO Solution Factory, which uses machine learning to analyse Earth observation data, and conducts research on ML-based satellite collision prediction. Nuske has a PhD in physics from the University of Hamburg.