Foundation models are increasingly used in critical areas. However, they can memorize parts of their training data. To better understand this phenomenon and develop countermeasures, researchers from DFKI Darmstadt organised a workshop titled 'The Impact of Memorization on Trustworthy Foundation Models' at the 42nd International Conference on Machine Learning (ICML). The conference took place in Vancouver from 13 to 19 July 2025.

Foundation models have been integrated into many different domains. They even support work in high-stakes areas such as healthcare, public safety and education. As they become more important, so does their safety. Research suggests that foundation models may exhibit unintended memorization. This can result in the leakage of sensitive personal data, copyrighted content, and confidential or proprietary information.

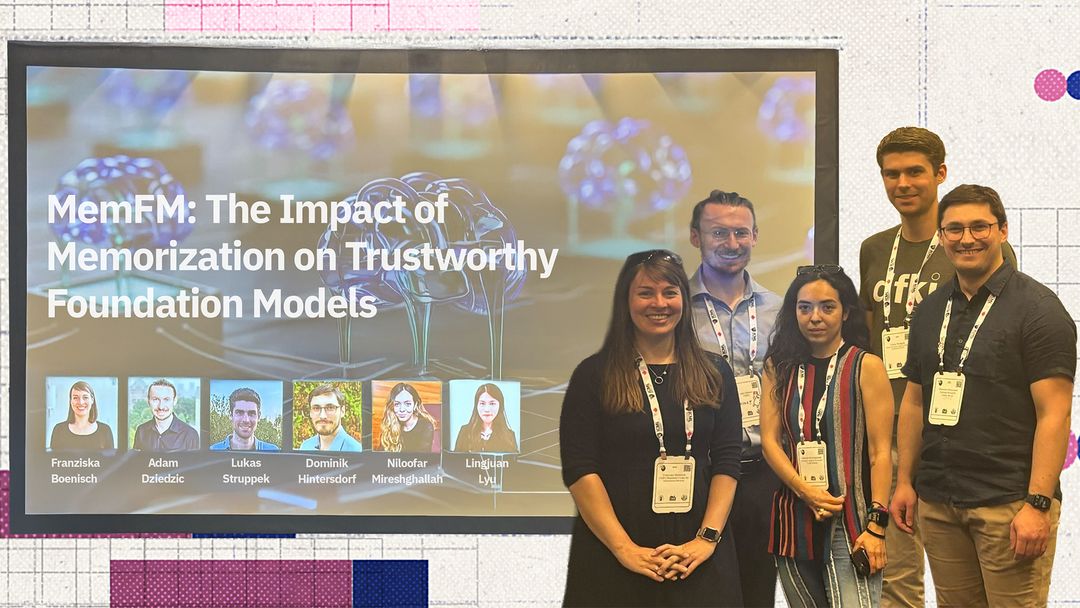

To promote research exchange in this field, Dominik Hintersdorf and Lukas Struppek, from the 'Foundations of Systemic AI' department at DFKI in Darmstadt, organised a workshop titled 'The Impact of Memorization on Trustworthy Foundation Models' at ICML, alongside other researchers. During the workshop, the challenges posed by memorization effects were examined from various perspectives, and the causes, consequences, and possible measures were discussed. The aim was to support the development of foundation models that benefit society without endangering privacy, intellectual property or public trust.

Together with their co-organisers Adam Dziedzic (CISPA Helmholtz Center for Information Security), Niloofar Mireshghallah (FAIR/CMU), Lingjuan Lyu (Sony AI) and Franziska Boenisch (CISPA Helmholtz Center for Information Security), Hintersdorf and Struppek secured speakers including Kamalika Chaudhuri (UCSD/Meta), A. Feder Cooper (Yale University/Microsoft), Vitaly Feldman (Apple), Pratyush Maini (Carnegie Mellon University) and Reza Shokri (National University of Singapore). Amy Beth Cyphert (West Virginia University College of Law), Casey Meehan (OpenAI) and Om Thakkar (OpenAI) participated in a panel discussion. The workshop was sponsored by CISPA Helmholtz Center for Information Security.

More information about DFKI's presence at ICML is available here: https://dfki.de/en/web/news/icml-2025-deceptive-explainability-in-ai-systems