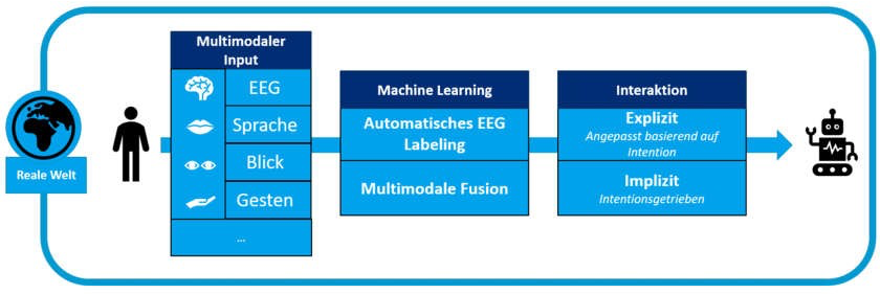

In the newly started project, the research departments Robotics Innovation Center and Cognitive Assistants are developing an adaptive, self-learning platform that enables various types of active interaction and is able to derive human intention from gestures, speech, eye movements and brain activity. The project kick-off took place on July 20, 2020 as a virtual event.

DFKI CEO Prof. Dr. Antonio Krüger, Head of Cognitive Assistants and project leader: “In EXPECT, DFKI is bundling competencies and expertise from the fields of cognitive assistants and robotics at its Saarbrücken and Bremen sites. In this way, we are creating a cornerstone for further application-oriented projects based on research into the integration of EEG data in hybrid brain-computer interfaces.”

DFKI is one of the pioneers in the mobile multimodal use of EEG data for interaction with robotic systems. “In the future, AI systems will support humans not only in restricted areas. Rather, they will act like personal assistants. To fulfil this task, they must understand humans, infer their intentions and provide context-sensitive support. Brain data make it possible to give the robot better, more direct insight into human intentions. An important field of application is, for example, rehabilitation after a stroke,” says Dr. Elsa Kirchner, project manager for the Robotics Innovation Center.

One of the greatest challenges in human-robot collaboration (HRC) is natural interaction. In the field of human-computer interaction (HCI), the word “natural” does not describe the interface to the interaction itself, but rather the process by which the user learns the interface's interaction principles. This learning process should be as easy as possible for the user and should build on known (natural) interaction principles such as voice commands and gestures.

This kind of interaction is of great importance in the field of human-robot collaboration. It enables the collaboration to be as efficient as possible and helps to reduce the human collaborator's fear of contact and general distrust of robot systems in everyday work. EXPECT thus has a direct positive influence on the increasing collaboration between humans and robots in the working world.

To make this possible, the project develops methods for the automated identification and joint analysis of human multimodal data and evaluates them in test scenarios. The systematic experiments also help to investigate how fundamentally important brain data are for predicting human intentions.

Maurice Rekrut, project manager for the research department Cognitive Assistants: “In the EXPECT project, we are investigating to what extent correlations between different modalities can be found in brain activity in order to make human-robot interaction more intuitive and natural. On the one hand, we want to design purely brain-computer interface-based interaction concepts, and on the other hand, we want to create multimodal concepts to provide the best interaction possibilities in every situation.”

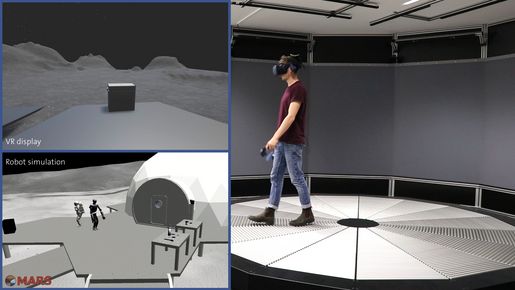

The technologies to be developed in EXPECT can be used in a wide range of applications – for example in space travel and extraterrestrial exploration, robot-supported rehabilitation and assistance, as well as in Industry 4.0 and industrial human-robot collaboration.

Project information

EXPECT is funded by the Federal Ministry of Education and Research (BMBF) within the R&D framework “IKT 2020 – Forschung für Innovationen” under the funding code 01IW20003 with a volume of 1.6 million euros over a period of four years.

Further information

https://www.dfki.de/en/web/research/projects-and-publications/projects-overview/project/expect

Robots learn to understand humans – EXPECT research project launched

Contact:

Prof. Dr. Antonio Krüger

- ceo@dfki.de

- Phone: +49 681 85775 5006

Prof. Dr. Elsa Andrea Kirchner

- Elsa.Kirchner@dfki.de

- Phone: +49 421 17845 4113

Dr.-Ing. Maurice Rekrut

- Maurice.Rekrut@dfki.de

- Phone: +49 681 85775 5137

Press contact:

Heike Leonhard, M.A.

- Heike.Leonhard@dfki.de

- Phone: +49 681 85775 5390