Trustworthy AI: The Bridge between Innovation and Acceptance


Trust as the foundation for a sustainable AI future

Trustworthy artificial intelligence is much more than a technological challenge – it is a prerequisite for the broad social acceptance and economic success of intelligent systems. There is a growing realisation in Germany and Europe that AI must not only be powerful, but also safe, comprehensible and ethically justifiable. Companies, public institutions and political decision-makers therefore face the task of developing reliable standards and testing procedures that meet these requirements without weakening the capacity to innovate.

A crucial building block for trust in AI is transparency. Users must be able to understand how a system arrives at its decisions – especially in safety-critical or ethically sensitive areas such as medicine, finance or robotics. This is one focus of research at DFKI: Through interdisciplinary collaboration, test criteria, certification procedures and formal guarantees are developed that enable an objective evaluation of the reliability and fairness of AI models. Initiatives such as MISSION KI, CERTAIN and HumanE-AI.net, as well as the projects CRAI and VeryHuman, show how technological excellence and regulatory requirements can be combined in a practical setting.

MISSION KI: A certification framework

A concrete example of this approach is MISSION KI. The initiative, launched by the Federal Ministry for Digital and Transport, is dedicated to strengthening the development of trustworthy AI systems. It does so through efficient testing standards for low- and high-risk AI systems, which translate abstract AI quality dimensions such as transparency and non-discrimination into concrete measurable values and thus into clearly defined testing criteria.
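To illustrate what turning a quality dimension into a measurable value can look like, the following minimal sketch computes one common non-discrimination measure, the demographic parity difference (the largest gap in positive-decision rates between groups). The function names, the sample data and the threshold of 0.1 are assumptions chosen for illustration; they are not taken from the MISSION KI testing standards.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return the largest gap in positive-prediction rate between any two groups.

    predictions: iterable of 0/1 model decisions
    groups: iterable of group labels, aligned with predictions
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())


def passes_criterion(predictions, groups, threshold=0.1):
    """Hypothetical test criterion: the gap must not exceed the threshold.

    The default of 0.1 is an illustrative assumption, not a regulatory value.
    """
    return demographic_parity_difference(predictions, groups) <= threshold


# Illustrative data: group "a" receives 3 of 4 positive decisions, group "b" 1 of 4.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(predictions, groups)
print(f"demographic parity difference: {gap:.2f}")
print("criterion met:", passes_criterion(predictions, groups))
```

In this form, the abstract requirement becomes a number that can be compared against an agreed limit. Which measure and which limit are appropriate depends on the application and would have to be specified by the relevant testing standard.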

‘Especially in critical fields of application – for example in diagnostics or in autonomous vehicles – AI models must make comprehensible and verifiable decisions. This creates synergies between science and industry, which work together on the specification of test criteria, thus bridging the gap between technological development and regulatory practice.’

Within the framework of MISSION KI, DFKI contributes to the development and determination of trustworthiness, especially of high-risk systems. A hybrid approach is pursued to operationalise the abstract requirements of AI quality and trustworthiness and to make them implementable and testable using concrete methods. DFKI is working on:

- a quality platform that will provide recommendations for specific technologies during the development of concrete AI systems to improve their trustworthiness.

- a test platform that will make aspects of trustworthiness measurable and detectable using technical testing tools.

Five specific applications in the field of medicine, which are being developed by DFKI in cooperation with application partners, will ultimately be used to test the developed methods. In addition, DFKI is making an important contribution to building a strong regional AI community as part of the Innovation and Quality Centre (IQZ) Kaiserslautern, which it operates together with MISSION KI. The focus here is on building the expertise of companies and founders by transferring knowledge from cutting-edge AI research.

CERTAIN: Guarantees as a path towards trust

Trust in AI is not created by regulation and framework conditions alone, but by verifiable guarantees. In this context, DFKI is working through CERTAIN on a Europe-wide initiative for the validation and certification of trustworthy AI systems.

‘CERTAIN is focused on developing certification of trustworthy AI systems. While the IQZ supports companies and industry with practical quality testing, advice and capacity building, CERTAIN is focusing on networking researchers across Europe, conducting methodological basic research and developing ethical guidelines for the responsible use of AI.’

Certifying AI systems includes extensive testing under a range of conditions to simulate scenarios that could arise in the real world. CERTAIN therefore takes a multidimensional approach:

- Guarantees by Design: AI models are equipped from the outset with mechanisms that ensure their reliability.

- Guarantees by Tools: Testing tools enable targeted testing of AI systems under different conditions.

- Guarantees by Insight: Transparency is promoted by visualisation and analysis methods that enable an understanding of how a system works.

- Guarantees by Interaction: Human feedback is actively integrated into the AI learning process to adapt systems to societal expectations.

HumanE-AI.net: human-centric AI as a differentiating factor

While the economic viability of AI is the main focus in other parts of the world, Europe is pursuing a decidedly human-centric approach. This is also evident in the HumanE-AI.net initiative, which aims to design AI systems as technology that supports people rather than replacing them. AI should function as a ‘cognitive exoskeleton’ – not as an autonomous decision-maker, but as an intelligent assistance system that supports people in complex situations.

Projects such as HumanE-AI.net are investigating how these requirements can be translated into technical standards to systematically strengthen trust.

VeryHuman: trustworthy robotics through formal verification

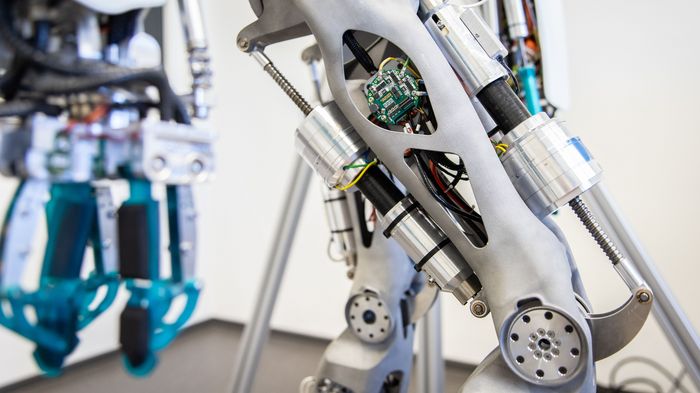

An essential prerequisite for trustworthy AI is the ability to provide robust guarantees for its behaviour – especially in robotics, where autonomous systems interact directly with humans. The VeryHuman research project is pursuing this approach with a hybrid verification model that combines mathematical modelling with AI methods to ensure the safety and traceability of humanoid robots.

‘One of the main objectives of VeryHuman is the mathematical modelling and verification of the motion behaviour of humanoid robots. Unlike stationary machines or classic industrial robots, their locomotion requires a highly complex control system with numerous degrees of freedom and varying environmental conditions.’

The core problem here is obtaining real training data. Classic AI models require large amounts of data to make reliable predictions. However, humanoid robots are not able to perform millions of motion sequences autonomously – whether due to physical limitations or safety risks. Therefore, VeryHuman combines simulations with formal methods from control theory to create an abstract model of walking behaviour. This approach provides mathematical proof that a robot will behave in a safe and stable manner in any situation.

The work of the DFKI researchers thus establishes the methodological basis for giving formal guarantees for the behaviour of robots – a central prerequisite for their safe integration into industrial and everyday applications. By mathematically modelling and abstracting complex motion sequences, VeryHuman can prove that robots adapt their behaviour in a systematic and predictable way. In the long term, this approach could pave the way for standardised certification of humanoid robots – comparable to a TÜV seal for autonomous systems. In this way, VeryHuman is making an important contribution to creating trustworthy AI that is not only technologically powerful but also verifiable, safe and socially acceptable.

CRAI: AI real-world laboratory for medium-sized companies

Developing trustworthy AI is a major challenge, especially for small and medium-sized enterprises (SMEs). While regulatory requirements are becoming increasingly precise, many companies lack the technical and financial resources to meet these requirements. The research project CRAI (AI regulatory sandbox for medium-sized businesses) addresses this problem by providing practical and scientifically sound support for SMEs. CRAI brings together stakeholders from science, business and regulation to jointly develop, test and optimise trustworthy AI systems for use by medium-sized businesses.

‘Regulatory learning is a key principle of CRAI. In collaboration with companies, scientists and regulators, we are developing practical guidelines for the AI Act and Data Act in a real-world laboratory – and are playing a pioneering role in Europe in designing future-proof regulations for artificial intelligence.’

This approach ensures that SMEs are familiarised with regulatory requirements at an early stage and can integrate them into their development processes (‘compliance by design’). At the same time, regulators benefit from the insights gained from real-world application, enabling iterative adaptation of the legal framework to technological developments.

In cooperation with medium-sized companies, reference solutions and prototypes are developed that offer a balance between technical performance, regulatory compliance and economic viability. The developed models are evaluated in terms of their transparency, explainability and fairness and can serve as blueprints for similar applications.

With its strong focus on application, CRAI complements existing DFKI activities in the field of trustworthy AI by supporting companies throughout the entire AI development cycle. In addition to scientific publications and best practice guidelines, the project will develop a platform that offers companies access to training materials, evaluation tools and certified AI models. In this way, CRAI makes a decisive contribution to the sustainable digitalisation and competitiveness of German SMEs.

Trustworthy AI as the European innovation model

Trustworthy AI is not a static state, but a continuous development process that combines scientific excellence with social responsibility. To promote sustainable acceptance, a close dialogue between research, industry, politics and society is needed. The methods developed at DFKI for certification, formal verification and regulatory support offer a pioneering contribution to the safe and ethical use of AI.

Europe has the opportunity to take a global leadership role with a human-centred AI approach. However, this requires a consistent combination of technological innovation, regulatory foresight and social acceptance. Projects such as MISSION KI, CERTAIN, HumanE-AI.net, CRAI and VeryHuman demonstrate that the future of artificial intelligence is shaped not only by performance, but also by trust and responsibility.

Shaping the future of trustworthy AI together

The development and implementation of trustworthy AI requires close collaboration between science, industry and politics. We invite companies, public institutions and political decision-makers to actively participate in the design of secure, transparent and powerful AI technologies. Whether through joint research projects, the transfer of scientific findings into practice or the development of new testing and certification procedures – let us work together to enshrine trust as a guiding principle of AI development.

Interested companies, research institutions and political stakeholders can contact DFKI for further information or to request cooperation.