Motivation

Focused research and development in the field of Deep Learning, especially in recent years, has produced a wide variety of network types, architectures, modules, training methods, and data sets. Nevertheless, building and configuring a state-of-the-art Deep Learning system for a given visual recognition task remains challenging. Typically, such a task starts with choosing an appropriate network architecture and network parameters. It continues with the difficult problem of exploiting the multi-modal nature of the input data, which in many cases contains not only visual information but also sound, motion, or text. Although Deep Learning approaches offer significant improvements in processing individual modalities, real-world systems should ideally use all available modalities. Because the end-to-end approach of Deep Learning combines feature extraction and classification into a single step, the traditional fusion concepts (early fusion, late fusion) need to be revisited. One question that currently arises is how and when the multiple modalities of an input signal should be merged (e.g., video data containing visual, motion, and acoustic information, as well as text or knowledge). Furthermore, it is still completely open how static or dynamic external context information (e.g., specialized domain knowledge or eye-tracking data) can be fed into Deep Learning systems.

Project content

DeFuseNN focuses on three challenges in Deep Learning and defines the following areas of activity to address them:

- "Building a knowledge base" to improve the understanding of the Deep Learning landscape

- Investigation and development of new "multi-modal fusion concepts" for deep learning

- Use of additional "external signals" to improve classification

Building a knowledge base

The goal of this task area is to provide an overview of which tasks can be solved by which types of deep neural networks, and which architectures and configurations (e.g., layers, blocks, training parameters) are suitable for them.

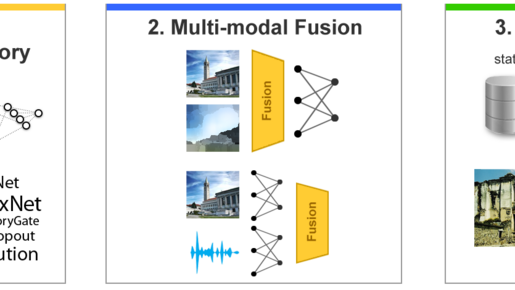

Multi-modal fusion

The goal of this task area is to develop fusion approaches that fit the underlying deep neural network architectures. For example, early fusion can often be regarded as already built into CNNs, since they receive the R, G, and B channels as separate input signals and combine them in the first convolutional layer. Late fusion, in contrast, can be regarded as a combination of two networks of the same type (e.g., two CNNs), in which the fully connected layers serve as the late fusion layer. In addition, new fusion layers that can be placed within the networks will be developed and investigated in the course of the project. These layers fuse signals from sub-networks during the forward pass (in-fusion). Sub-networks can also run in parallel, e.g., to process sound and image content simultaneously. Such configurations often require synchronized or interwoven connections.
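To make the three fusion points more concrete, here is a minimal, framework-free Python sketch. It is an illustration only, not the project's implementation. It assumes two toy modalities, an "image" feature vector and an "audio" feature vector, and it uses small randomly initialized fully connected layers in place of real CNNs. The class `Dense`, the helper functions, and all layer sizes are hypothetical. The three variants differ only in where the modalities are concatenated: at the input (early fusion), after separate branches via a fully connected fusion layer (late fusion), or at an intermediate layer inside the network (in-fusion).

```python
import random
from typing import Callable, List

Vector = List[float]


class Dense:
    """Toy fully connected layer y = W x + b with optional ReLU (hypothetical)."""

    def __init__(self, n_in: int, n_out: int, relu: bool = True, seed: int = 0):
        rng = random.Random(seed)  # fixed seed -> reproducible random weights
        self.w = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out
        self.relu = relu

    def __call__(self, x: Vector) -> Vector:
        if len(x) != len(self.w[0]):
            raise ValueError(f"expected {len(self.w[0])} inputs, got {len(x)}")
        out = [sum(wi * xi for wi, xi in zip(row, x)) + bi
               for row, bi in zip(self.w, self.b)]
        return [max(0.0, v) for v in out] if self.relu else out


def concat(*parts: Vector) -> Vector:
    """Concatenate feature vectors: the basic fusion operation used below."""
    return [v for part in parts for v in part]


def run(layers: List[Dense], x: Vector) -> Vector:
    """Forward pass through a stack of layers."""
    for layer in layers:
        x = layer(x)
    return x


# Assumed toy sizes: 4 image features, 3 audio features, 5 hidden units, 2 classes.
IMG, AUD, HID, CLASSES = 4, 3, 5, 2

# Early fusion: modalities are concatenated at the input of a single network.
early_net = [Dense(IMG + AUD, HID, seed=1), Dense(HID, CLASSES, relu=False, seed=2)]

# Late fusion: one complete branch per modality; a fully connected layer fuses their outputs.
img_branch = [Dense(IMG, HID, seed=3), Dense(HID, CLASSES, relu=False, seed=4)]
aud_branch = [Dense(AUD, HID, seed=5), Dense(HID, CLASSES, relu=False, seed=6)]
late_fc = Dense(2 * CLASSES, CLASSES, relu=False, seed=7)

# In-fusion: per-modality encoders whose hidden features are merged mid-network.
img_enc = Dense(IMG, HID, seed=8)
aud_enc = Dense(AUD, HID, seed=9)
fused_head = [Dense(2 * HID, HID, seed=10), Dense(HID, CLASSES, relu=False, seed=11)]


def early_fusion(img: Vector, aud: Vector) -> Vector:
    return run(early_net, concat(img, aud))


def late_fusion(img: Vector, aud: Vector) -> Vector:
    return late_fc(concat(run(img_branch, img), run(aud_branch, aud)))


def in_fusion(img: Vector, aud: Vector) -> Vector:
    return run(fused_head, concat(img_enc(img), aud_enc(aud)))


if __name__ == "__main__":
    img = [0.2, 0.5, 0.1, 0.9]  # made-up image features
    aud = [0.3, 0.7, 0.4]       # made-up audio features
    fusions: List[Callable[[Vector, Vector], Vector]] = [early_fusion, late_fusion, in_fusion]
    for fn in fusions:
        print(fn.__name__, fn(img, aud))
```

All three functions return class scores of the same shape, so they can be swapped and compared directly in an experiment. In a real system, the branches would be trained CNNs or audio networks rather than random dense layers.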

External Signals

Neural networks process an input by passing the signal through a series of layers. For such layered compositions, the project considers feeding context into the network as an external signal, in order to combine formal knowledge with statistical learning.
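One common way to inject an external signal into an intermediate layer is feature-wise linear modulation (FiLM). In FiLM, a context vector produces a per-unit scale and shift for the hidden features. The sketch below uses FiLM purely as an illustration; the project description does not state that DeFuseNN uses this technique. The context vector (for example, hypothetically encoded domain-knowledge flags or eye-tracking fixation coordinates), the function names, and all sizes are assumptions.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def init(n_in: int, n_out: int, seed: int) -> Tuple[Matrix, Vector]:
    """Random toy weights (hypothetical); biases start at zero."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


def dense(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Affine map y = W x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


# Assumed sizes: 4 input features, 2 context values, 3 hidden units, 2 classes.
FEAT, CTX, HID, CLASSES = 4, 2, 3, 2
W1, b1 = init(FEAT, HID, seed=1)     # input -> hidden
Wg, bg = init(CTX, HID, seed=2)      # context -> per-unit scale (gamma)
Wb, bb = init(CTX, HID, seed=3)      # context -> per-unit shift (beta)
W2, b2 = init(HID, CLASSES, seed=4)  # hidden -> class scores


def hidden(x: Vector) -> Vector:
    """Hidden layer with ReLU, computed from the input signal only."""
    return [max(0.0, v) for v in dense(x, W1, b1)]


def classify(x: Vector) -> Vector:
    """Baseline classifier without any external signal."""
    return dense(hidden(x), W2, b2)


def classify_with_context(x: Vector, context: Vector) -> Vector:
    """FiLM-style: the external context scales and shifts the hidden features."""
    gamma = dense(context, Wg, bg)
    beta = dense(context, Wb, bb)
    # (1 + gamma) keeps the features unchanged when gamma is zero.
    h = [(1.0 + g) * hi + be for g, hi, be in zip(gamma, hidden(x), beta)]
    return dense(h, W2, b2)


if __name__ == "__main__":
    x = [0.2, 0.5, 0.1, 0.9]  # made-up input features
    print("no context:  ", classify(x))
    print("with context:", classify_with_context(x, [1.0, 0.5]))
```

Because the biases of the context projections start at zero, an all-zero context leaves the hidden features unchanged, and the conditioned model reduces to the baseline. This makes it straightforward to compare a network with and without the external signal.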

Benefits and utilization

The DeFuseNN project will provide results on several current scientific questions in the field of Deep Learning. For the first time, the knowledge base will provide an overview of this rapidly developing research field, making it easier to derive Deep Learning solutions for existing problems. In addition, novel fusion concepts will be developed and investigated that make new problems accessible to Deep Learning. The work in DeFuseNN will also form the basis for further research on the understanding of Deep Learning. The results of DeFuseNN will be shared with the scientific community through publications at renowned conferences and through collaborations with other scientific institutes. Economically, the work in DeFuseNN offers opportunities for utilization with established national and international cooperation partners by transferring the basic concepts into marketable solutions.