What Is SmartKom?

There is a growing need for more intuitive and efficient interfaces to computing devices, especially for users with limited technical experience or physical disabilities, and in mobile or time-critical interaction. SmartKom is a multimodal dialog system that combines speech, gesture, and facial expressions for input and output. Understanding of spontaneous speech is combined with video-based recognition of natural gestures. SmartKom features the situated understanding of possibly imprecise, ambiguous, or incomplete multimodal input and the generation of coordinated, cohesive, and coherent multimodal presentations. SmartKom represents, reasons about, and exploits models of the user, the domain, the task, the context, and the media itself. One of the major scientific goals of SmartKom is to explore and design new computational methods for the seamless integration and mutual disambiguation of multimodal input and output on semantic and pragmatic levels.

What Are the Goals of SmartKom?

SmartKom aims to exploit one of the major characteristics of human-human interactions: the coordinated use of different code systems such as language, gesture, and facial expressions for interaction in complex environments. SmartKom employs a mixed-initiative approach to allow intuitive access to knowledge-rich services. SmartKom merges three user interface paradigms (spoken dialog, graphical user interfaces, and gestural interaction) to achieve truly multimodal communication. Natural language interaction in SmartKom is based on speaker-independent speech understanding technology. For the graphical user interface and the gestural interaction, SmartKom does not use a traditional WIMP (windows, icons, menus, pointer) interface; instead, it supports the natural use of gestures. SmartKom's interaction style breaks radically with the traditional desktop metaphor. The multimodal system is based on the situated delegation-oriented dialog paradigm (SDDP): the user delegates a task to a virtual communication assistant, visible on the graphical display. With more complex tasks, this delegation cannot be achieved in a simple command-and-control style. In a collaborative dialog between the user and the system, which is represented by a life-like character, specifications of the delegated task and of possible plans are worked out. In contrast to task-oriented dialogs, in which the user carries out a task with the help of the system, with SDDP the user delegates a task to an agent and helps the agent, where necessary, in the execution of the task.

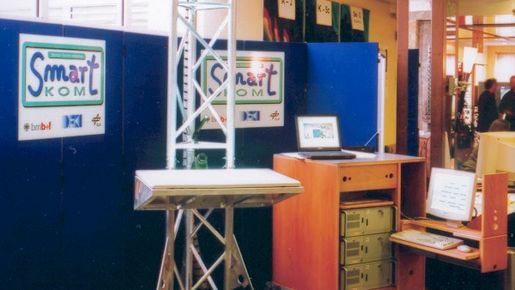

How Does SmartKom Look in Practice?

Three versions of SmartKom have been defined. SmartKom-Public (see the front page) is a multimodal communication kiosk for airports, train stations, or other public places where people may seek information on tourist sites or facilities such as movie theaters. Users can also access their personalized standard applications via wideband channels. SmartKom-Mobile uses a PDA as a front end. It can be added to a car navigation system or carried by a pedestrian. Additional services like route planning and interactive navigation through a city can be accessed via GPS connectivity. SmartKom-Home realizes a multimodal portal to information services. It provides electronic program guides (EPG) for TV, controls consumer electronics devices like VCRs, and accesses standard applications like phone and e-mail. The system is accessed from home through a portable webpad: the user operates SmartKom-Home either in lean-forward mode, with coordinated speech and gestural interaction, or in lean-back mode, with voice input alone.

What Are the Key Features of the Demonstrators?

In the current fully operational SmartKom demonstrators, released in June 2003, the user can combine spontaneous speech and natural hand gestures. SmartKom responds with coordinated speech, gestures, graphics, and facial expressions of the personalized interface agent. An LCD projector and a gesture recognition unit (SIVIT) are located at the top of the demonstrator. Graphical output is projected onto a horizontal surface. The user is located in front of this virtual touch screen. She can use her hands and fingers to point to the visualized objects. There is no need to touch the surface, since the video-based gesture recognition unit tracks the location of the user's hand and fingers. The state of the user is taken into account by an integrated interpretation of facial expressions and prosody. The demonstrators cover nine exemplary applications with nearly fifty functionalities.
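The pointing interaction on the virtual touch screen can be pictured with a small sketch. It assumes the gesture recognition unit reports a fingertip position in the coordinate system of the projected display and that each visualized object occupies a rectangle; the names `DisplayObject` and `resolve_pointing` are hypothetical and are not taken from SmartKom or SIVIT.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DisplayObject:
    """A visualized object, reduced to a rectangle (hypothetical model)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        # True if the point lies inside or on the border of the rectangle.
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def resolve_pointing(objects: List[DisplayObject],
                     px: float, py: float) -> Optional[str]:
    """Return the name of the first object under the fingertip, if any."""
    for obj in objects:
        if obj.contains(px, py):
            return obj.name
    return None


# Two movie theaters shown side by side on the projected surface.
display = [
    DisplayObject("cinema_1", x=0, y=0, width=100, height=50),
    DisplayObject("cinema_2", x=120, y=0, width=100, height=50),
]
```

A real recognizer would more likely deliver several candidate targets with confidence scores rather than a single hit, which is what makes mutual disambiguation with speech possible.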

How Does SmartKom Work?

SmartKom is based on a multi-blackboard architecture with parallel processing threads that allow a high degree of flexibility. The system operates on three dual Pentium PCs, running Windows 2000 or Linux. All modules, such as media fusion and media design, are realized as separate processes on distributed computers. Each module is implemented in C, C++, Java, or Prolog. A key design decision concerned the development of an XML-based markup language called M3L for the representation of all of the information that flows between the various processing components of SmartKom. For example, the word lattice, the gesture lattice, the media fusion results, the presentation plan, and the discourse context are all represented in M3L. M3L is designed for the representation and exchange of complex multimodal content, of information about segmentation and synchronization, and of information about confidence in processing results. For each communication blackboard, XML schemata allow for automatic data checking during data exchange. The media fusion component merges the output from speech interpretation and gesture analysis. Its results are passed to the intention recognition module and augmented with discourse and world knowledge. On the basis of the recognized intention, the action planner initiates an appropriate reaction. If necessary, it contacts external services via the function modelling interface. Finally, it invokes the presentation planner to select the appropriate output modalities. The presentation planner activates the language generator and the speech synthesizer. Since in the situated delegation-oriented dialog paradigm (SDDP) a life-like character interacts with the user, the output modalities have to be synchronized so as to ensure a coherent and natural communication experience. One example is the synchronization of the lip movements of Smartakus with the speech signal.
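The blackboard idea and the media fusion step can be illustrated with a minimal sketch. It is not SmartKom code: the blackboard names, the `Hypothesis` class, the `"DEICTIC"` marker, and the rule of multiplying confidences are all assumptions made for illustration. Plain dictionaries stand in for M3L documents, and everything runs synchronously in a single process, whereas the real modules are separate processes on distributed computers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Hypothesis:
    content: dict      # simplified stand-in for an M3L document
    confidence: float  # confidence in the processing result, in [0, 1]


class Blackboards:
    """Named blackboards: modules subscribe to some and publish to others."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, name, callback):
        self._subscribers[name].append(callback)

    def publish(self, name, hypothesis):
        # Deliver the hypothesis to every module listening on this blackboard.
        for callback in self._subscribers[name]:
            callback(hypothesis)


class MediaFusion:
    """Merges one speech hypothesis with one gesture hypothesis."""

    def __init__(self, boards):
        self.boards = boards
        self.speech = None
        self.gesture = None
        boards.subscribe("speech.interpretation", self.on_speech)
        boards.subscribe("gesture.analysis", self.on_gesture)

    def on_speech(self, hypothesis):
        self.speech = hypothesis
        self._try_fuse()

    def on_gesture(self, hypothesis):
        self.gesture = hypothesis
        self._try_fuse()

    def _try_fuse(self):
        # Fuse only once both modalities have delivered a hypothesis.
        if self.speech is None or self.gesture is None:
            return
        merged = dict(self.speech.content)
        # Resolve a deictic reference ("this one") with the pointing target.
        if merged.get("object") == "DEICTIC":
            merged["object"] = self.gesture.content["target"]
        # Assumed scoring rule: treat both confidences as independent.
        confidence = self.speech.confidence * self.gesture.confidence
        self.speech = self.gesture = None
        self.boards.publish("fusion.result", Hypothesis(merged, confidence))


def run_demo():
    boards = Blackboards()
    MediaFusion(boards)
    results = []
    # A downstream module (e.g. intention recognition) would listen here.
    boards.subscribe("fusion.result", results.append)
    boards.publish("speech.interpretation",
                   Hypothesis({"act": "reserve", "object": "DEICTIC"}, 0.8))
    boards.publish("gesture.analysis",
                   Hypothesis({"target": "cinema_3"}, 0.5))
    return results[0]
```

Calling `run_demo()` returns a fused hypothesis in which the spoken request has its deictic slot filled by the gesture target. Because modules communicate only through named blackboards, a module can be replaced or added without changing the others, which is one plausible reading of the flexibility the architecture aims for.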

What Are the Salient Characteristics of SmartKom?

- Seamless integration and mutual disambiguation of multimodal input and output on semantic and pragmatic levels

- Situated understanding of possibly imprecise, ambiguous, or incomplete multimodal input

- Context-sensitive interpretation of dialog interaction on the basis of dynamic discourse and context models

- Adaptive generation of coordinated, cohesive, and coherent multimodal presentations

- Semi- or fully automatic completion of user-delegated tasks through the integration of information services

- Intuitive personification of the system through a presentation agent

What Are the SmartKom Results?

Scientific impact

- publications: 255

- academic degrees: 66

- appointments: 6

Economic impact

- 52 registered patents

- 29 spin-off products

- 6 spin-off companies

"Therewith, SmartKom is the most successful research project of all 29 lead projects started since 1998 by the German Federal Ministry of Education and Research." Dr. Bernd Reuse, head of the software systems division of BMBF

Partners

- DFKI GmbH (consortium lead)

- DaimlerChrysler AG

- European Media Laboratory GmbH

- Friedrich-Alexander-Universität Erlangen-Nürnberg

- International Computer Science Institute

- Ludwig-Maximilians-Universität München

- MediaInterface Dresden GmbH

- Philips Speech Processing

- Siemens AG

- Sony International (Europe) GmbH

- Sympalog Voice Solutions GmbH

- Universität Stuttgart