Open Source

DFKI makes many of its developments available as open source. Here you can explore a selection of our freely available software projects and datasets, which you can also find on platforms such as GitHub and Hugging Face.

Ophthalmo-CDSS – a clinical decision support system

The goal of Ophthalmo-AI is to develop better decision support for diagnosis and therapy in ophthalmology through effective collaboration between machine and human expertise (interactive machine learning, IML). DFKI aims to integrate clinical guidelines and physicians' knowledge (expert knowledge, i.e. human intelligence) interactively with machine learning (artificial intelligence) into the diagnostic process, in a so-called augmented intelligence system.

SphereCraft – a dataset for spherical keypoint detection, matching, and camera pose estimation

A dataset designed specifically for detecting and matching spherical keypoints and for estimating camera pose. It addresses the limitations of existing datasets by providing keypoints extracted with popular handcrafted and learning-based detectors, together with their ground-truth correspondences. It contains synthetic scenes with photorealistic rendering, accurate depth maps, and 3D meshes. It also contains real scenes captured with various spherical cameras. SphereCraft supports the development and evaluation of multi-view algorithms and models, with the aim of advancing the state of the art in computer vision tasks on spherical images.
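Spherical keypoints are compared on the sphere rather than in the image plane. This matters because pixels at the left and right borders of a panorama are actually neighbors. The sketch below shows this under stated assumptions: equirectangular images and a hypothetical axis convention that is not taken from SphereCraft. It maps pixel coordinates to unit bearing vectors and measures the great-circle angle between them. The helper names `pixel_to_bearing` and `angular_distance` are illustrative only.

```python
import math


def pixel_to_bearing(u, v, width, height):
    """Map an equirectangular pixel (u, v) to a unit vector on the sphere.

    Hypothetical convention (not SphereCraft's documented one): u runs left
    to right over longitude [-pi, pi), v runs top to bottom over latitude
    [pi/2, -pi/2], and pixel centers sit at +0.5.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return (
        math.cos(lat) * math.cos(lon),
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
    )


def angular_distance(a, b):
    """Great-circle angle in radians between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot)))


# Pixels on opposite image borders end up close together on the sphere.
left = pixel_to_bearing(0, 255, 1024, 512)
right = pixel_to_bearing(1023, 255, 1024, 512)
print(angular_distance(left, right))
```

An angular threshold of this kind is a natural way to decide whether a predicted match agrees with a ground-truth correspondence. A pixel-distance threshold would penalize matches that wrap around the image seam.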

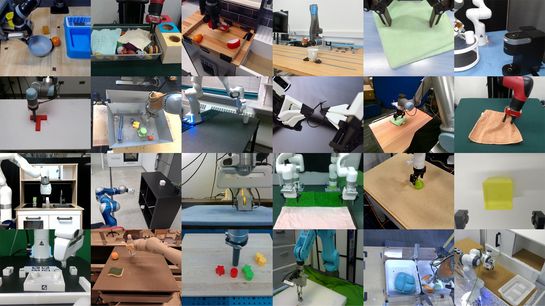

Open X-Embodiment – aims to provide all open-source robot data in a unified format to simplify reuse

We introduce the Open X-Embodiment Dataset, the largest open-source real-robot dataset to date. It contains over 1 million real robot trajectories from 22 robot embodiments, ranging from single robot arms to bimanual robots and quadrupeds. The dataset was built by pooling 60 existing robot datasets from 34 robotics research labs around the world. Our analysis shows two things. First, the visually distinct scenes are well distributed across the different robot embodiments. Second, the dataset covers a wide range of common behaviors and household objects.
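The idea behind a unified format is that every source dataset is converted once into a shared episode/step structure. Downstream code then only has to understand that one structure. The sketch below illustrates this with two invented source layouts. The schema (`Step`, `Episode`), the converters, and all field names are hypothetical and do not reflect the dataset's actual format.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Step:
    """One time step in a hypothetical unified schema."""
    image: Any              # camera observation (placeholder type)
    action: List[float]     # robot action flattened into one vector
    instruction: str        # natural-language task description
    is_last: bool = False   # marks the final step of the episode


@dataclass
class Episode:
    embodiment: str
    steps: List[Step] = field(default_factory=list)


def from_lab_a(record: Dict[str, Any]) -> Episode:
    """Hypothetical source A: parallel lists of frames and actions."""
    n = len(record["frames"])
    steps = [
        Step(
            image=record["frames"][i],
            action=list(record["actions"][i]),
            instruction=record["task"],
            is_last=(i == n - 1),
        )
        for i in range(n)
    ]
    return Episode(embodiment=record["robot"], steps=steps)


def from_lab_b(record: Dict[str, Any]) -> Episode:
    """Hypothetical source B: per-step dicts with the action split into parts."""
    raw = record["trajectory"]
    steps = []
    for i, t in enumerate(raw):
        # Concatenate position delta, rotation delta, and gripper command.
        action = list(t["delta_pos"]) + list(t["delta_rot"]) + [t["gripper"]]
        steps.append(
            Step(
                image=t["rgb"],
                action=action,
                instruction=record["language"],
                is_last=(i == len(raw) - 1),
            )
        )
    return Episode(embodiment=record["embodiment"], steps=steps)
```

After conversion, a training loop can iterate over `Episode.steps` without knowing which lab a trajectory came from.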

pytransform3d – a Python library for transformations in three dimensions

pytransform3d offers various functions for working with transformations. These include operations such as concatenation and inversion for common representations of rotation and translation, as well as conversions between those representations. The library clearly documents its transformation conventions, and its tight integration with matplotlib enables quick visualization or animation.
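Concatenation and inversion are the core operations on rigid transformations. The sketch below shows what they compute for 4x4 homogeneous matrices. It is written in plain Python and deliberately does not use pytransform3d's API. The function names are hypothetical, and the sketch assumes the convention that `A2B` maps points from frame A to frame B.

```python
import math


def transform_from_z_rotation(angle, translation):
    """4x4 homogeneous matrix: rotation about z by `angle` (rad) plus translation."""
    c, s = math.cos(angle), math.sin(angle)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert(T):
    """Invert a rigid transform via R^T and -R^T t (no general matrix inverse)."""
    R = [row[:3] for row in T[:3]]
    t = [T[i][3] for i in range(3)]
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def apply(T, p):
    """Transform a 3D point p by T."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


# Concatenation order: first A -> B, then B -> C gives A2C = B2C @ A2B.
A2B = transform_from_z_rotation(math.pi / 2, (1.0, 0.0, 0.0))
B2C = transform_from_z_rotation(0.0, (0.0, 2.0, 0.0))
A2C = matmul(B2C, A2B)
print(apply(A2C, (1.0, 0.0, 0.0)))
```

Ambiguities in the order of concatenation are one reason explicit documentation of conventions, as pytransform3d provides, is valuable.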

CrossQ – batch normalization in deep reinforcement learning for greater sample efficiency and simplicity

Sample efficiency is a crucial problem in deep reinforcement learning. Recent algorithms such as REDQ and DroQ improved sample efficiency by increasing the update-to-data (UTD) ratio to 20 critic gradient update steps per environment sample. However, this comes at the cost of a greatly increased computational load. To reduce that load, we introduce CrossQ, a lightweight algorithm for continuous control tasks. CrossQ makes careful use of batch normalization and removes target networks. With these changes it surpasses the current state of the art in sample efficiency while keeping a low UTD ratio of 1. Notably, CrossQ does not rely on the advanced bias-reduction schemes used in current methods.

RoBivaL data corpus

The RoBivaL project compares different robot locomotion concepts from space research and agricultural applications in experiments under agricultural conditions. The insights gained are intended to promote technology and knowledge transfer between space and agricultural research. To further strengthen this transfer, the recorded test data are stored in a standardized test database developed within RoBivaL and made available for future research.

Thermostat – a large collection of NLP model explanations and accompanying analysis tools

Thermostat combines explainability methods from the Captum library with Hugging Face datasets and transformers. It avoids repeatedly running common experiments in explainable NLP, which lowers both the environmental impact and the financial barriers. It also improves the comparability and reproducibility of research results while reducing implementation effort.
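The savings described above come from computing an explanation once and reusing it, rather than rerunning the same attribution experiment. The sketch below expresses that compute-once idea in generic form. It uses neither Thermostat's nor Captum's API. The `ExplanationStore` class, the key layout, the placeholder attribution function, and the example identifiers are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical key layout: (dataset, model, explainer).
Key = Tuple[str, str, str]


class ExplanationStore:
    """Compute-once store for attribution results (illustrative only)."""

    def __init__(self, compute: Callable[[str, str, str], List[float]]) -> None:
        self._compute = compute
        self._cache: Dict[Key, List[float]] = {}
        self.computations = 0  # how many expensive runs actually happened

    def get(self, dataset: str, model: str, explainer: str) -> List[float]:
        key = (dataset, model, explainer)
        if key not in self._cache:
            self.computations += 1
            self._cache[key] = self._compute(dataset, model, explainer)
        return self._cache[key]


def placeholder_attributions(dataset: str, model: str, explainer: str) -> List[float]:
    # Stand-in for an expensive attribution run over a whole dataset.
    return [0.1, -0.2, 0.05]


store = ExplanationStore(placeholder_attributions)
store.get("imdb", "bert-base-uncased", "integrated_gradients")
store.get("imdb", "bert-base-uncased", "integrated_gradients")  # served from the store
print(store.computations)
```

Sharing such precomputed results publicly extends the same saving across research groups, and it ensures everyone compares against identical attributions.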

Motion capture data for representing hand movements

A dataset of human manipulation actions recorded with a Qualisys motion capture system. We tracked both the movements of individual fingers and the position and orientation of the right hand. Some recordings include additional markers on the back, shoulder, and elbow.

MARS – Machina Arte Robotum Simulans

MARS is a platform-independent simulation and visualization tool developed for robotics research. It consists of a core framework that contains all major simulation components:

- a GUI (based on Qt)
- 3D visualization (using OpenSceneGraph)
- a physics engine (based on ODE)

MARS has a modular design and can be used very flexibly. For example, the physics simulation can be run without visualization and GUI. MARS can also be extended with custom plugins that add new functionality.
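The modular design described above follows a common pattern. A core loop always steps the physics, and optional components such as a viewer or GUI are attached as plugins. That allows the same simulation to run headless. The sketch below shows this pattern in Python. The classes are hypothetical, do not mirror MARS's interfaces, and use a falling point mass as a stand-in for a real physics engine.

```python
from typing import List, Optional, Protocol


class Plugin(Protocol):
    """Anything with an update() hook can be attached to the simulation loop."""

    def update(self, t: float) -> None: ...


class PointMassPhysics:
    """Stand-in for a physics engine: one point mass falling under gravity."""

    def __init__(self, height: float = 10.0) -> None:
        self.z = height
        self.vz = 0.0

    def step(self, dt: float) -> None:
        # Semi-implicit Euler: update velocity first, then position.
        self.vz -= 9.81 * dt
        self.z += self.vz * dt


class Simulation:
    """Core loop: always steps physics and drives whatever plugins are attached."""

    def __init__(self, physics: PointMassPhysics, plugins: Optional[List[Plugin]] = None) -> None:
        self.physics = physics
        self.plugins: List[Plugin] = list(plugins or [])
        self.t = 0.0

    def run(self, steps: int, dt: float) -> None:
        for _ in range(steps):
            self.physics.step(dt)
            self.t += dt
            for plugin in self.plugins:
                plugin.update(self.t)


class HeightLogger:
    """Example plugin; a viewer or GUI would hook into the loop the same way."""

    def __init__(self, physics: PointMassPhysics) -> None:
        self.physics = physics
        self.samples: List[float] = []

    def update(self, t: float) -> None:
        self.samples.append(self.physics.z)


# Headless run: physics only, no visualization attached.
Simulation(PointMassPhysics()).run(steps=100, dt=0.01)
```

The core does not depend on any visualization. Attaching or omitting plugins therefore changes what is observed, not how the simulation itself runs.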

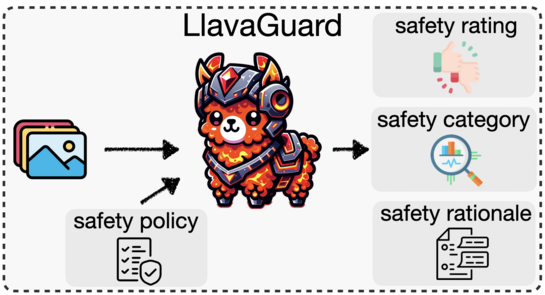

LlavaGuard – VLM-based safeguards for image dataset curation and safety assessment

We introduce LlavaGuard, a family of VLM-based safeguard models that provide a versatile framework for assessing the safety compliance of visual content. Specifically, we designed LlavaGuard for dataset annotation and for safeguarding generative models. To this end, we collected and annotated a high-quality visual dataset with a comprehensive safety taxonomy, which we use to fine-tune VLMs on context-aware safety risks. A key innovation is that LlavaGuard's responses contain comprehensive information: a safety rating, the violated safety categories, and a detailed rationale. In addition, the customizable taxonomy categories we introduce allow LlavaGuard to be adapted to the context of different scenarios.
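A structured response (rating, violated categories, rationale) is easy to consume programmatically, for example when filtering a dataset. The sketch below parses such a response and applies a simple policy. The JSON keys (`rating`, `categories`, `rationale`), the category names, and the helper functions are assumptions for illustration and are not LlavaGuard's documented output format. As a simplified stand-in for customizable taxonomy categories, the sketch filters after the fact rather than changing what the model is asked to assess.

```python
import json
from dataclasses import dataclass
from typing import List, Set


@dataclass
class SafetyAssessment:
    rating: str            # "safe" or "unsafe" (assumed vocabulary)
    categories: List[str]  # violated safety categories
    rationale: str         # free-text justification


def parse_assessment(raw: str) -> SafetyAssessment:
    """Parse a JSON response using hypothetical key names."""
    data = json.loads(raw)
    rating = str(data["rating"]).lower()
    if rating not in ("safe", "unsafe"):
        raise ValueError(f"unexpected rating: {rating!r}")
    return SafetyAssessment(rating, list(data.get("categories", [])), data.get("rationale", ""))


def is_allowed(assessment: SafetyAssessment, enforced: Set[str]) -> bool:
    """Post-hoc policy: only violations of enforced categories block the image."""
    if assessment.rating == "safe":
        return True
    return not (set(assessment.categories) & enforced)


example = parse_assessment(
    '{"rating": "Unsafe", "categories": ["violence"], "rationale": "depicts a fight"}'
)
print(is_allowed(example, enforced={"violence"}))
```

Keeping the rationale alongside the decision makes individual curation decisions auditable after the fact.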